Both data warehouses and data lakes can hold large amounts of data for analysis. As you may recall, data warehouses contain curated, structured data, have a predesigned schema that is applied when the data is written, call on large amounts of CPU, SSDs, and RAM for speed, and are intended for use by business analysts. Data lakes hold even more data, which can be unstructured or structured. That data is initially stored raw and in its native format, usually on cheap spinning disks; schemas are applied when the data is read; and the raw data is filtered and transformed for analysis. Data lakes are intended initially for data engineers and data scientists, with business analysts able to use the data once it has been curated.
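The schema-on-write versus schema-on-read distinction can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular warehouse or lake works: the `SCHEMA` dictionary, the `Warehouse` and `Lake` classes, and the sample records are hypothetical, and the "lake" keeps raw JSON text in memory rather than files on cheap disks.

```python
import json

# Hypothetical schema for illustration: column name -> expected Python type.
SCHEMA = {"order_id": int, "customer": str, "amount": float}


def validate(record: dict) -> dict:
    """Raise ValueError unless the record matches SCHEMA exactly."""
    if set(record) != set(SCHEMA):
        raise ValueError(f"unexpected columns: {sorted(record)}")
    for column, expected in SCHEMA.items():
        if not isinstance(record[column], expected):
            raise ValueError(f"{column} should be {expected.__name__}")
    return record


class Warehouse:
    """Schema-on-write: every record is checked before it is stored."""

    def __init__(self):
        self.rows = []

    def write(self, record: dict) -> None:
        self.rows.append(validate(record))

    def read(self) -> list:
        return list(self.rows)


class Lake:
    """Schema-on-read: raw text is stored as-is and parsed only when read."""

    def __init__(self):
        self.raw = []

    def write(self, line: str) -> None:
        self.raw.append(line)  # no checks at ingest time

    def read(self) -> list:
        good = []
        for line in self.raw:
            try:
                # json.JSONDecodeError is a subclass of ValueError.
                good.append(validate(json.loads(line)))
            except ValueError:
                pass  # a real pipeline would quarantine or repair bad records
        return good


warehouse = Warehouse()
warehouse.write({"order_id": 1, "customer": "acme", "amount": 12.5})
try:
    warehouse.write({"order_id": "2", "customer": "acme", "amount": 3.0})
except ValueError as err:
    print("rejected at write time:", err)

lake = Lake()
lake.write('{"order_id": 1, "customer": "acme", "amount": 12.5}')
lake.write("not even json")  # accepted now, filtered out at read time
print(len(lake.raw), len(lake.read()))  # 2 raw lines stored, 1 usable row
```

The trade-off shows up in where the failure happens: the warehouse refuses bad data up front, while the lake accepts everything and pushes validation onto whoever reads it.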

Information lakehouses, such as the topic of this review, Dremio, bridge the space in between data warehouses and data lakes. They start with an information lake and add quick SQL, a more effective columnar storage format, an information brochure, and analytics.Dremio explains its item as a data lakehouse platform for teams that understand and like SQL. Its selling points are SQL for everybody, from service

- user to data engineer; Fully handled, with minimal software application and information

- upkeep; Assistance for any data, with the ability to

- consume data into the lakehouse or query in location; and No lock-in, with the flexibility to use

- any engine today and tomorrow. According to Dremio, cloud data

warehouses such as Snowflake, Azure Synapse, and Amazon Redshift generate lock-in since the data is inside the storage facility. I do not entirely agree with this, but I do agree that it’s actually tough to move big amounts of data from one cloud system to another.Also according to Dremio, cloud information lakes such as Dremio and Glow offer more versatility since the information is stored where several engines can utilize it. That’s true. Dremio declares 3 benefits that derive from this: Flexibility to use numerous best-of-breed engines on the same information and use cases; Easy to adopt additional engines today; and Easy to embrace brand-new engines in the future, simply point them at the data. Competitors to Dremio include the Databricks Lakehouse Platform, Ahana Presto, Trino( formerly Presto SQL), Amazon Athena, and open-source Apache Glow. Less direct competitors are information warehouses that support external tables, such as Snowflake and Azure Synapse. Dremio has painted all business information warehouses as their competitors, but I dismiss that as marketing, if not actual buzz. After all, data lakes and information warehouses fulfill various usage cases and serve different users, although data lakehouses at least partly

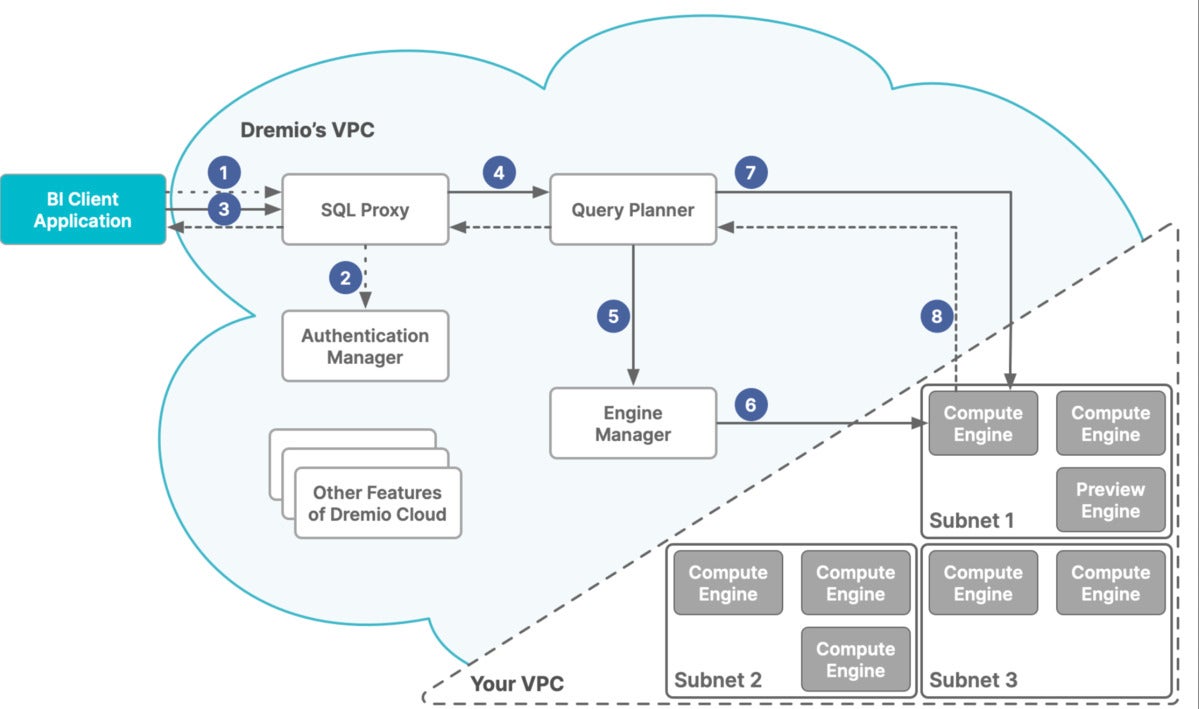

cover the two categories.Dremio Cloud summary Dremio server software application is a Java information lakehouse application for Linux that can be deployed on Kubernetes clusters, AWS, and Azure. Dremio Cloud is essentially the Dremio server software running as a fully handled service on AWS. Dremio Cloud’s functions are divided between virtual personal clouds(VPCs), Dremio’s and yours, as shown in thediagram below. Dremio’s VPC acts as the control plane. Your VPC functions as an execution plane. If you use multiple cloud accounts with Dremio Cloud, each VPC acts as an execution plane.The execution

aircraft holds numerous clusters, called calculate engines. The control airplane processes SQL queries with the Finder question engine and sends them through an engine manager, which dispatches them to a proper compute engine based upon your rules.Dremio claims sub-second reaction times with “reflections,”which are enhanced materializations of source data or queries, similar to emerged views. Dremio declares raw speed that’s 3x much faster than Trino (an application of the Presto SQL engine )thanks to Apache Arrow, a standardized column-oriented memory format. Dremio also claims, without specifying a

point of contrast, that information engineers can ingest, transform, and provision information in a portion of the time thanks to SQL DML, dbt, and Dremio’s semantic layer.Dremio has no organization intelligence, machine learning, or deep learning capabilities of its own, however it has chauffeurs and adapters that support BI, ML, and DL software application, such as Tableau, Power BI, and Jupyter Notebooks. It can also connect to information sources in tables in lakehouse storage and in external relational databases.< img alt ="dremio cloud 01 "width= "1200"height="709" src=" https://images.idgesg.net/images/article/2022/08/dremio-cloud-01-100931826-large.jpg?auto=webp&quality=85,70 "/ > IDG Dremio Cloud is split into 2 Amazon virtual private clouds(VPCs). Dremio’s VPC hosts the control aircraft, including the SQL processing. Your VPC hosts the execution plane, which contains the compute engines. Dremio Arctic introduction Dremio Arctic is an intelligent metastore for Apache Iceberg, an open table format for huge analytic datasets, powered by Nessie, a native Apache Iceberg catalog. Arctic supplies a contemporary , cloud-native alternative to Hive Metastore,

and is supplied by Dremio as a forever-free service. Arctic uses the following capabilities: Git-like information management: Brings Git-like variation control to data lakes, making it possible for data engineers to handle the data lake with the very same finest practices Git enables for software application development, consisting of dedicates, tags, and branches. Information optimization(coming  soon

soon

): Instantly preserves and enhances information to make it possible for faster processing and lower the manual effort involved in managing a lake. This consists of guaranteeing that the information is columnarized, compressed, compacted(for bigger files), and separated properly when data and schemas are updated. Works with all engines: Supports all Apache Iceberg-compatible technologies, consisting of inquiry engines (Dremio Finder, Presto, Trino, Hive), processing engines(Glow ), and streaming engines(Flink ). Dremio data file formats Much of the performance and performance of Dremio depends upon the disk and memory information file formats used.Apache Arrow Apache Arrow, which was developed by Dremio and added to open source, specifies a language-independent columnar memory format for flat and hierarchical information, organized for efficient analytic operations on modern hardware like CPUs and GPUs.
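To see why a columnar layout suits analytic scans, here is a minimal sketch using only Python's standard `array` module. It is not Apache Arrow and does not use Arrow's API (the Python bindings for Arrow live in the separate `pyarrow` package); the records and column names are hypothetical. The point is only that a column scan reads one contiguous, typed buffer instead of every field of every row.

```python
from array import array

# Three hypothetical records in row-oriented form: one tuple per record.
rows = [
    (1, "acme", 12.5),
    (2, "globex", 3.0),
    (3, "acme", 7.25),
]


def to_columns(records):
    """Pivot row tuples into one buffer per column.

    This mimics the idea behind a columnar memory format, not Arrow's
    actual buffer layout.
    """
    ids, names, amounts = zip(*records)
    return {
        "order_id": array("q", ids),    # contiguous signed 64-bit integers
        "customer": list(names),        # strings kept as a plain list here
        "amount": array("d", amounts),  # contiguous 64-bit floats
    }


columns = to_columns(rows)

# Row layout: summing one field still walks through every whole record.
row_total = sum(record[2] for record in rows)

# Columnar layout: the scan touches only the single "amount" buffer.
col_total = sum(columns["amount"])

print(row_total, col_total)
print(columns["amount"].itemsize * len(columns["amount"]), "bytes scanned")
```

Both totals are the same; what differs is how much memory the scan has to touch. In Arrow proper, each column also carries a validity bitmap for nulls and offset buffers for variable-length values such as strings, which this sketch omits.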

Apache Iceberg

Apache Iceberg is a high-performance format for huge analytic tables. It brings the reliability and simplicity of SQL tables to big data, while making it possible for engines such as Sonar, Spark, Trino, Flink, Presto, Hive, and Impala to safely work with the same tables at the same time. Iceberg supports flexible SQL commands to merge new data, update existing rows, and perform targeted deletes.

Apache Parquet

Apache Parquet is an open-source, column-oriented data file format designed for efficient data storage and retrieval. It provides efficient data compression and encoding schemes with enhanced performance to handle complex data in bulk.

Apache Iceberg vs. Delta Lake

According to Dremio, the Apache Iceberg data file format was created by Netflix, Apple, and other tech powerhouses, supports INSERT/UPDATE/DELETE with any engine, and has strong momentum in the open-source community. By contrast, again according to Dremio, the Delta Lake data file format was created by Databricks, supports INSERT/UPDATE with Spark and SELECT with any SQL query engine, and is primarily used in conjunction with Databricks.

The Delta Lake documentation on GitHub begs to differ. For example, there is a connector that allows Trino to read and write Delta Lake files, and a library that

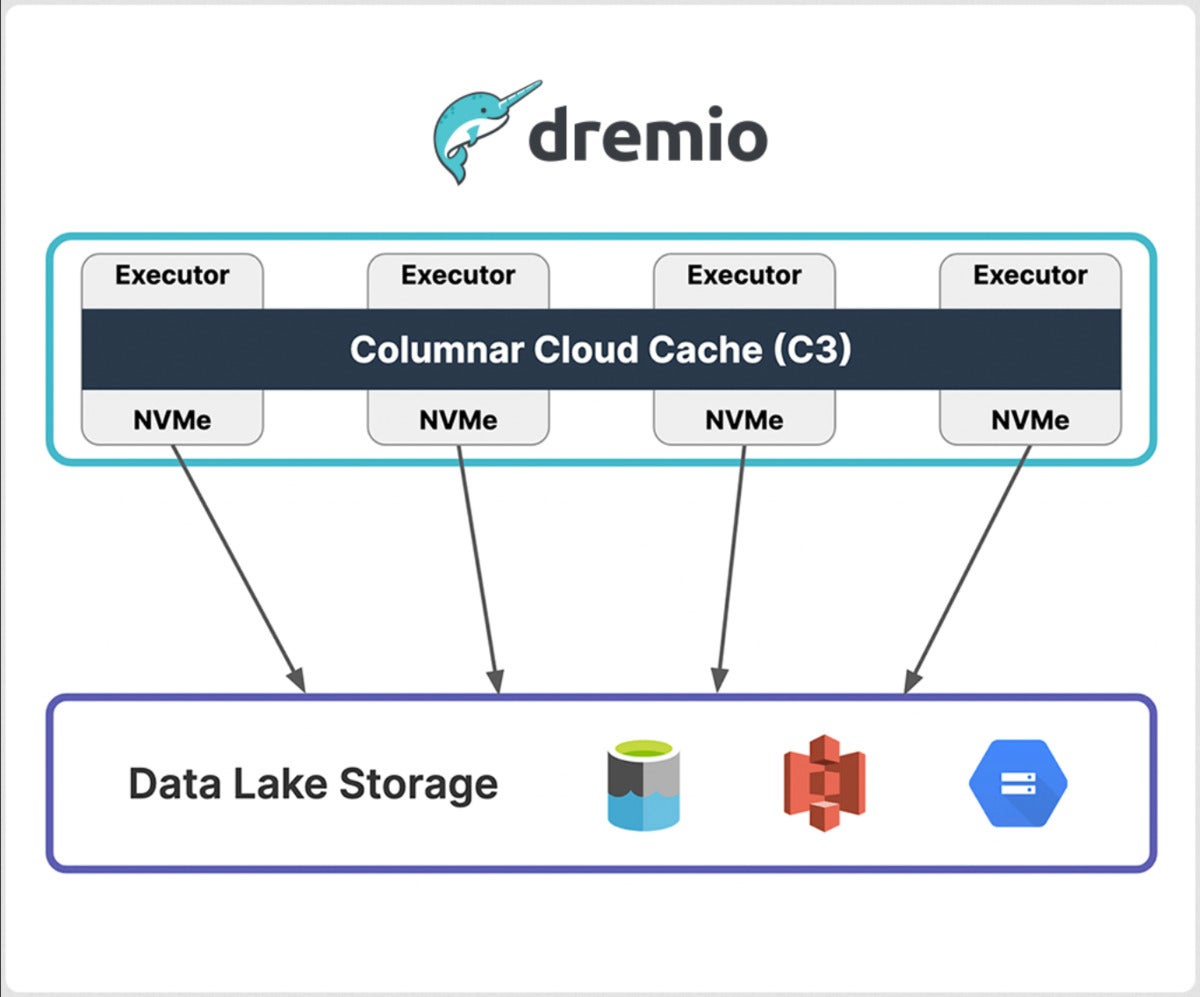
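Iceberg's row-level operations (merging new data, updating existing rows, and targeted deletes), and its support for several engines working on one table at the same time, rely in large part on the fact that each Iceberg commit produces a new table snapshot rather than editing the current one in place. The toy model below illustrates that idea in Python. It is a sketch under loud assumptions: `SnapshotTable` and its methods are hypothetical, the whole table lives in memory as a dictionary, and nothing here reflects Iceberg's actual metadata files, manifests, or API.

```python
from types import MappingProxyType


class SnapshotTable:
    """Toy copy-on-write table keyed by primary key (hypothetical, not Iceberg).

    Every write builds a new read-only snapshot instead of mutating the
    current one, so a reader holding an older snapshot keeps a consistent view.
    """

    def __init__(self):
        self.snapshots = [MappingProxyType({})]  # snapshot 0: empty table

    def current(self):
        return self.snapshots[-1]

    def _commit(self, rows: dict) -> int:
        self.snapshots.append(MappingProxyType(rows))
        return len(self.snapshots) - 1  # id of the new snapshot

    def merge(self, incoming: dict) -> int:
        """Upsert: insert new keys and overwrite existing ones."""
        rows = dict(self.current())
        rows.update(incoming)
        return self._commit(rows)

    def update(self, predicate, change) -> int:
        """Apply change(row) to every row where predicate(row) is true."""
        rows = {k: (change(v) if predicate(v) else v)
                for k, v in self.current().items()}
        return self._commit(rows)

    def delete(self, predicate) -> int:
        """Targeted delete: drop only the rows matching the predicate."""
        rows = {k: v for k, v in self.current().items() if not predicate(v)}
        return self._commit(rows)


table = SnapshotTable()
table.merge({1: {"customer": "acme", "amount": 12.5},
             2: {"customer": "globex", "amount": 3.0}})
reader_view = table.current()  # a concurrent reader pins this snapshot

table.merge({2: {"customer": "globex", "amount": 4.0},
             3: {"customer": "initech", "amount": 9.0}})
table.update(lambda r: r["customer"] == "acme",
             lambda r: {**r, "amount": r["amount"] * 2})
table.delete(lambda r: r["amount"] < 5)

print(dict(reader_view))      # still the two original rows
print(dict(table.current()))  # rows 1 and 3 after merge, update, and delete
print(len(table.snapshots))   # empty snapshot plus four commits
```

Keeping older snapshots around is also what makes Git-like features such as Arctic's commits, tags, and branches easy to picture: a tag or branch can be thought of as a named pointer to one snapshot.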

Google Cloud Storage

a subset of those columns and filter for data within a specific timeframe, then C3 will cache only that portion of your table. By selectively caching data, C3 also dramatically reduces cloud storage I/O costs, which can make up 10% to 15% of the costs for each query you run, according to Dremio.

Dremio's Columnar Cloud Cache (C3) feature speeds up future queries by using the NVMe SSDs in cloud instances to cache data used by previous queries. (IDG)

Data Reflections

Data Reflections enable sub-second BI queries and eliminate the need to create cubes and rollups prior to analysis. Data Reflections are data structures that intelligently precompute aggregations and other operations on data, so you don't have to do complex aggregations and drill-downs on the fly. Reflections are completely transparent to end users. Instead of connecting to a specific materialization, users query the desired tables and views, and the Dremio optimizer picks the best reflections to satisfy and accelerate the query.

Dremio Engines

Dremio features a multi-engine architecture, so you can create multiple right-sized, physically isolated engines for various workloads in your organization. You can easily set up workload management rules to route queries to the engines you define, so you'll never have to worry again about complex data science workloads preventing an executive's dashboard from loading. Aside from eliminating resource contention, engines can quickly resize to tackle workloads of any concurrency and throughput, and auto-stop when you're not running queries.

Dremio Engines are essentially scalable clusters of instances configured as executors. Rules help to dispatch queries to the desired engines. (IDG)

Getting started with Dremio Cloud

The Dremio Cloud Getting Started guide covers:

- Adding a data lake to a project;
- Creating a physical dataset from source data;
- Creating a virtual dataset;
- Querying a virtual dataset; and
- Accelerating a query with a reflection.

I won't show you every step of the tutorial, since you can read it yourself and run through it in your own free account. Two essential points are that:

- A physical dataset (PDS) is a table representation of the data in your source. A PDS cannot be modified by Dremio Cloud. The way to create a physical dataset is to format a file or folder as a PDS.
- A virtual dataset (VDS) is a view derived from physical datasets or other virtual datasets. Virtual datasets are not copies of the data, so they use very little memory and always reflect the current state of the parent datasets they are derived from.
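The PDS, VDS, and reflection ideas from the tutorial can be mimicked in plain SQL. The sketch below uses Python's built-in `sqlite3` module purely as a stand-in; it does not use Dremio's SQL dialect or API, and the `orders` table, `acme_orders` view, and `orders_by_customer` summary table are hypothetical names. The base table plays the role of a physical dataset, the view plays a virtual dataset that always reflects the current data, and a precomputed summary table roughly approximates an aggregation reflection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Stand-in for a physical dataset (PDS): a base table holding source data.
conn.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "acme", 12.5), (2, "globex", 3.0), (3, "acme", 7.25)],
)

# Stand-in for a virtual dataset (VDS): the view stores its query, not a data copy.
conn.execute(
    "CREATE VIEW acme_orders AS SELECT * FROM orders WHERE customer = 'acme'"
)


def count(sql):
    return conn.execute(sql).fetchone()[0]


print(count("SELECT COUNT(*) FROM acme_orders"))  # 2
conn.execute("INSERT INTO orders VALUES (4, 'acme', 1.0)")
print(count("SELECT COUNT(*) FROM acme_orders"))  # 3: the view sees the new row


# Rough stand-in for an aggregation reflection: a precomputed summary table.
# Unlike a Dremio reflection, it must be refreshed and queried explicitly here.
def refresh_summary():
    conn.execute("DROP TABLE IF EXISTS orders_by_customer")
    conn.execute(
        "CREATE TABLE orders_by_customer AS "
        "SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer"
    )


refresh_summary()
live = dict(conn.execute("SELECT customer, SUM(amount) FROM orders GROUP BY customer"))
precomputed = dict(conn.execute("SELECT customer, total FROM orders_by_customer"))
print(live == precomputed)  # True
```

The key difference from Dremio is the last step: here the summary table has to be refreshed and queried by name, whereas Dremio's optimizer is described as choosing reflections transparently while users keep querying the original tables and views.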